The Cost of Choosing the Wrong Tech Stack – A Lesson from a Tech Lead

Our Tech Lead shares how choosing the wrong tech stack can cost teams time and morale. Read the full story for the real-world context, the final solution, and some key lessons.

TL;DR

-

The Perfect Architecture Trap: A fintech startup chose a complex tech stack for perceived performance benefits but crashed from 40 to 20 story points velocity because the architecture was overly complex for their actual needs and team capabilities.

-

Root Causes: Choosing unfamiliar technologies without team expertise while implementing microservices architecture created operational chaos that solved tomorrow's scalability problems while creating today's velocity problems.

-

The Fix: Refactored to a simpler architecture aligned with team strengths, resulting in 60% velocity improvement, simplified operations, and restored team morale within 6 weeks.

-

Key Lesson: Team expertise trumps technology hype - validate with proof-of-concepts first, match complexity to organizational maturity, and optimize real bottlenecks (not theoretical ones) while being transparent with stakeholders about technical decisions' business impact.

The "Perfect" Architecture

In early 2021, I worked at a fintech startup where we faced an exciting challenge: building a fintech application from scratch. We designed what seemed like the ideal system – microservices with domain-driven design, powered by Go. Our reasoning seemed sound:

-

Performance First: Fintech demanded speed for handling thousands of requests so we chose Go for its fast runtime.

-

Microservices for Scale: Each service had its own database to eliminate bottlenecks

-

Domain-Driven Design: Complex business rules needed clean abstractions and extensive testing

Here's what we should have done first: built a two-week proof of concept with a single critical user journey. Instead, we launched into full development with confidence. Two months later, we were drowning.

When Reality Hits

Our sprint velocity crashed from 40 story points to barely 20. Features that should have taken 6-9 story points were consistently taking 12-15 points. What went wrong?

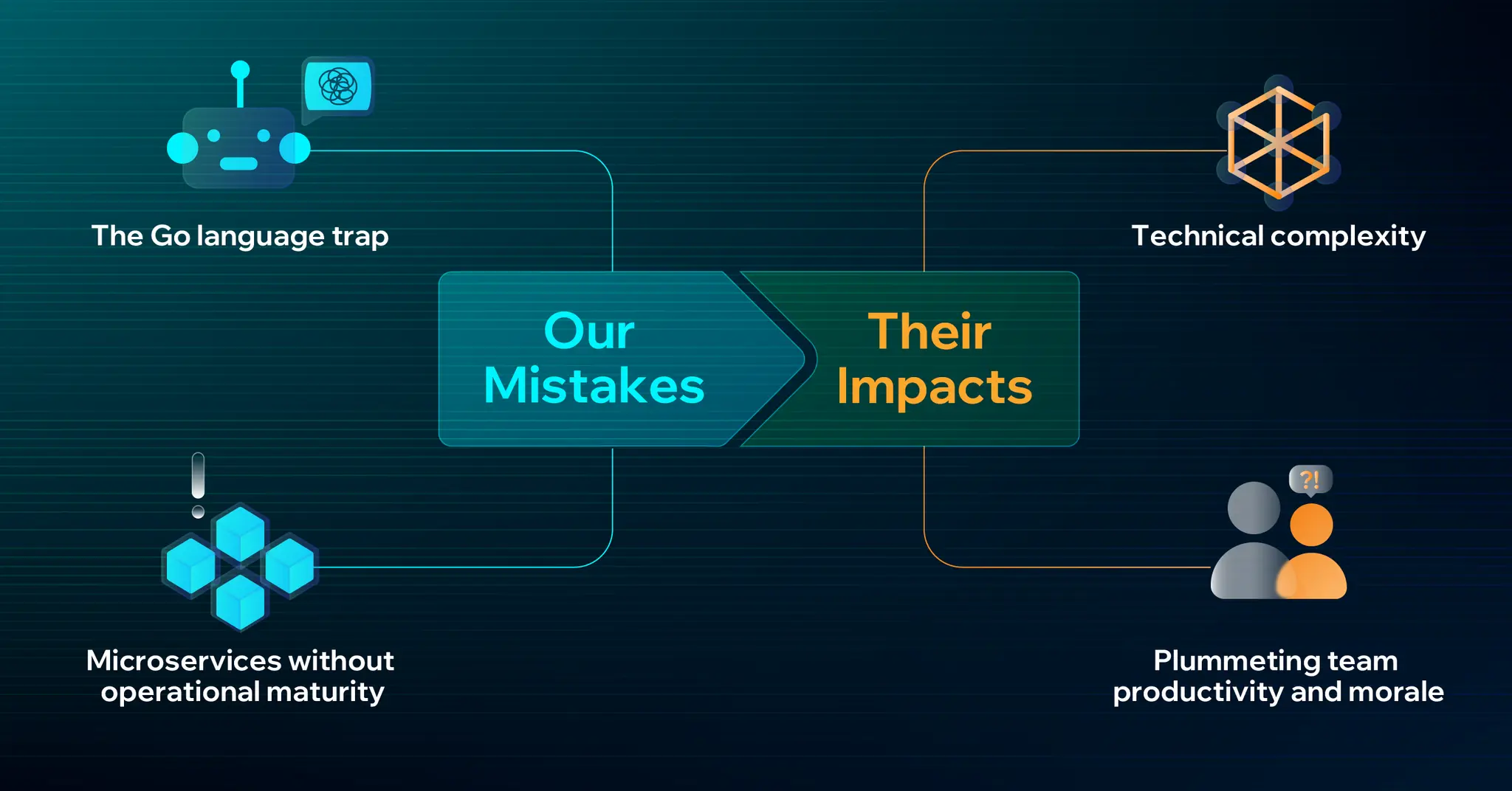

The Go Language Trap

The biggest red flag I missed: we had JavaScript veterans but no Go experts, yet we chose Go anyway. This should have been a hard stop.

Go in 2021 was deceptively simple – too simple for complex domain logic. Without generics (which didn't arrive until Go 1.18), we found ourselves writing repetitive boilerplate code. The multiple layers of DTOs – from controller to application service to domain model and back became a nightmare of manual mapping. Every small change rippled through endless conversion functions.

Any experienced Go developer would have warned us about the boilerplate hell for complex domain models. We were learning a new language while trying to implement complex patterns – a recipe for disaster.

Microservices Without Operational Maturity

Our 10 microservices seemed elegant in theory, but created operational chaos:

-

10 Databases, 10 Migration Scripts: that consumed enormous developer time.

-

Transaction Nightmares: How do you maintain data consistency across 10 different databases? We built custom middleware to track transaction states and handle rollbacks – a massive engineering effort that added months to every feature.

-

Communication Complexity: Services needed both synchronous (gRPC) and asynchronous (message queues) communication, requiring extensive boilerplate and making testing incredibly difficult.

We were solving tomorrow's scalability problems while creating today's velocity problems. The classic rule is "start with a modular monolith, extract services when you have real pain points." We did the opposite.

Not to mention that "thousands of requests" for a pre-investment fintech startup was pure over-engineering. We were building for Netflix scale when we needed to focus on getting our first 100 users.

The Hidden Costs of Wrong Decisions

Every architectural decision multiplied our complexity:

-

Testing a single transaction across multiple services became an integration nightmare

-

Debugging issues required tracing through multiple service logs

-

Simple changes required coordinating deployments across multiple services

But the technical pain wasn’t the worst of it. The people cost was higher:

-

Onboarding new developers took weeks just to grasp how services interacted

-

Knowledge became siloed, code reviews slowed down, and team morale dropped

-

Senior developers were spending days on tasks that should have taken hours.

-

One team member told me bluntly: "I feel like I'm fighting the language instead of solving problems."

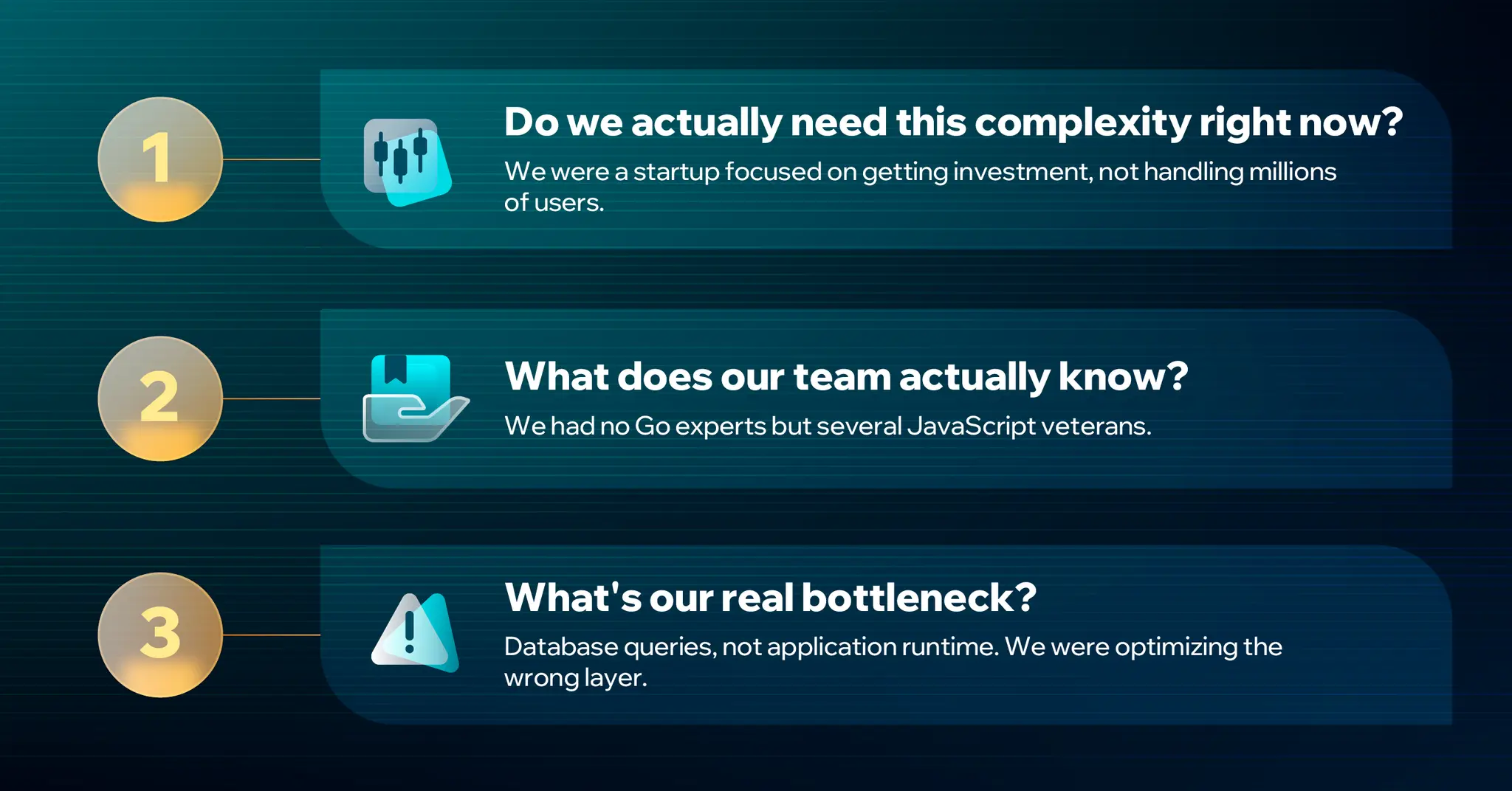

Asking Better Questions (And Having Hard Conversations)

When sprint velocity halves, that should trigger immediate investigation – not months of struggle. I should have called a technical spike after the first month.

We stepped back and asked fundamental questions that we should have started with:

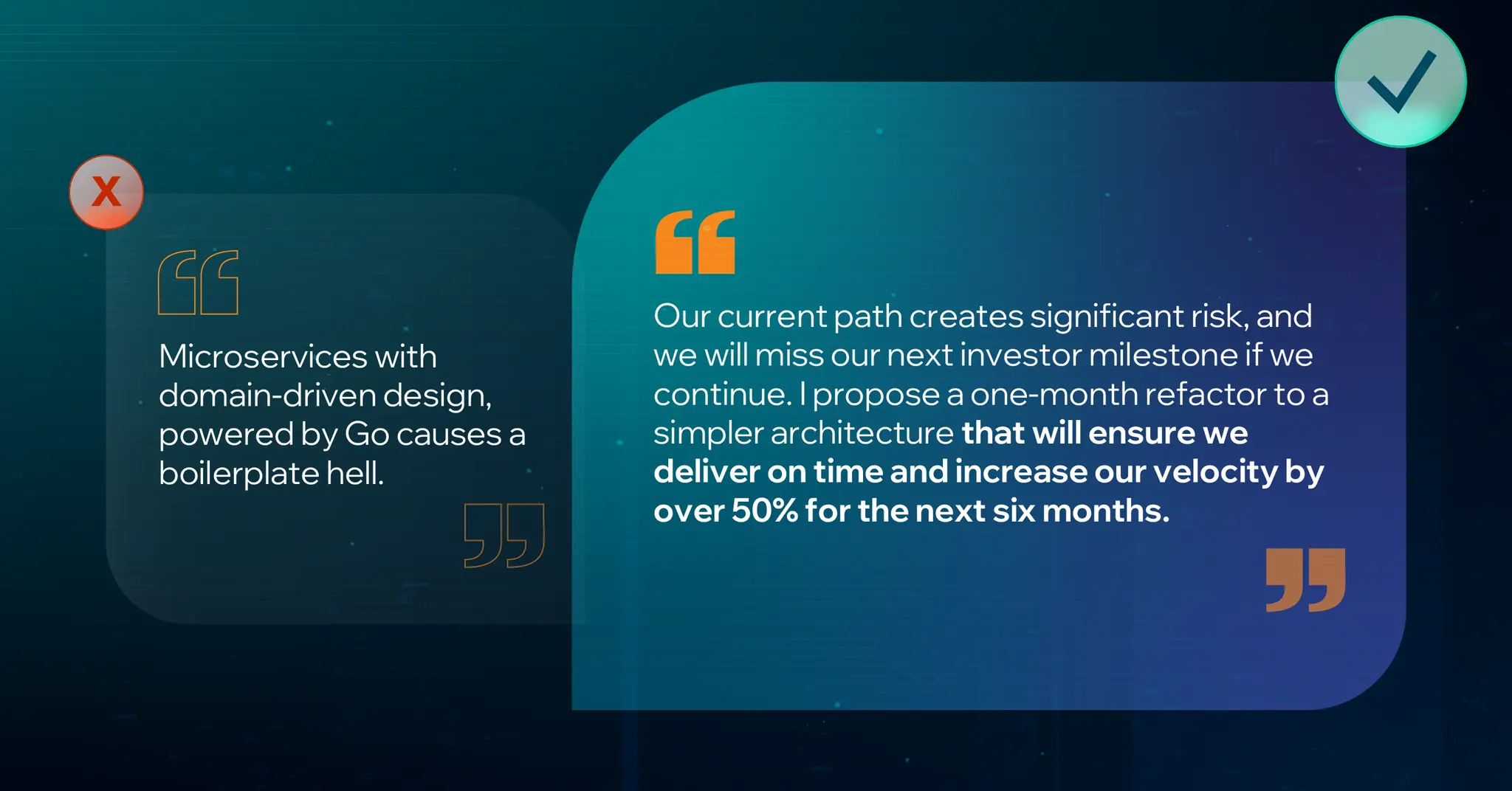

The hardest conversation was admitting to stakeholders that our "modern, scalable" architecture was actually slowing us down. I had to explain that we needed to invest a full month in refactoring to hit our roadmap targets. I framed it as risk mitigation: continue struggling and miss our investor milestones, or invest now to ensure consistent delivery for the next six months.

The real lesson: I should have handled the sunk cost fallacy head-on. The architecture was fundamentally wrong, and trying to "fix" it would have been throwing good money after bad.

The Solution of Strategic Simplification

We made the hard decision to refactor to a Node.js monolith using NestJS but this time, we built incrementally:

Team Expertise Alignment

Leveraging our JavaScript experience eliminated the learning curve, letting us focus on business logic instead of language quirks. This wasn't just about technical efficiency. it was about team morale and confidence.

Modular Monolith Architecture

-

Single Database: Transactions became simple again.

-

Clear Domain Boundaries: We kept domain separation for potential future microservices migration, but without the operational overhead.

-

Reduced DTOs: JavaScript's native JSON handling eliminated layers of conversion code.

-

Simplified Testing: Unit and integration tests became straightforward.

Performance Strategy

Instead of distributed complexity, we focused on proven database optimization:

-

Intelligent caching strategies

-

Proper indexing

-

Connection pooling

-

Query optimization

We could always add sharding later if needed, then we'd solve actual performance problems, not theoretical ones.

Incremental Validation

This time, we built and deployed core features every week, just enough to test assumptions and get feedback quickly. This allowed us to validate each architectural decision with real usage data instead of theoretical discussions.

Small, tangible wins replaced uncertainty, helping us make smarter choices with less risk and more clarity.

The Positive Results

The change was transformative. Six weeks after the refactor:

-

60% Velocity Improvement: Features that once took 12-15 story points were now consistently delivered in 6-8 points.

-

Simplified Testing: Integration tests that were once nearly impossible became a routine part of our workflow.

-

Developer Happiness: The mood shifted entirely. The team was energized and excited to be building products again, not fighting tools.

-

Stakeholder Confidence: We delivered our next investor milestone two weeks ahead of schedule.

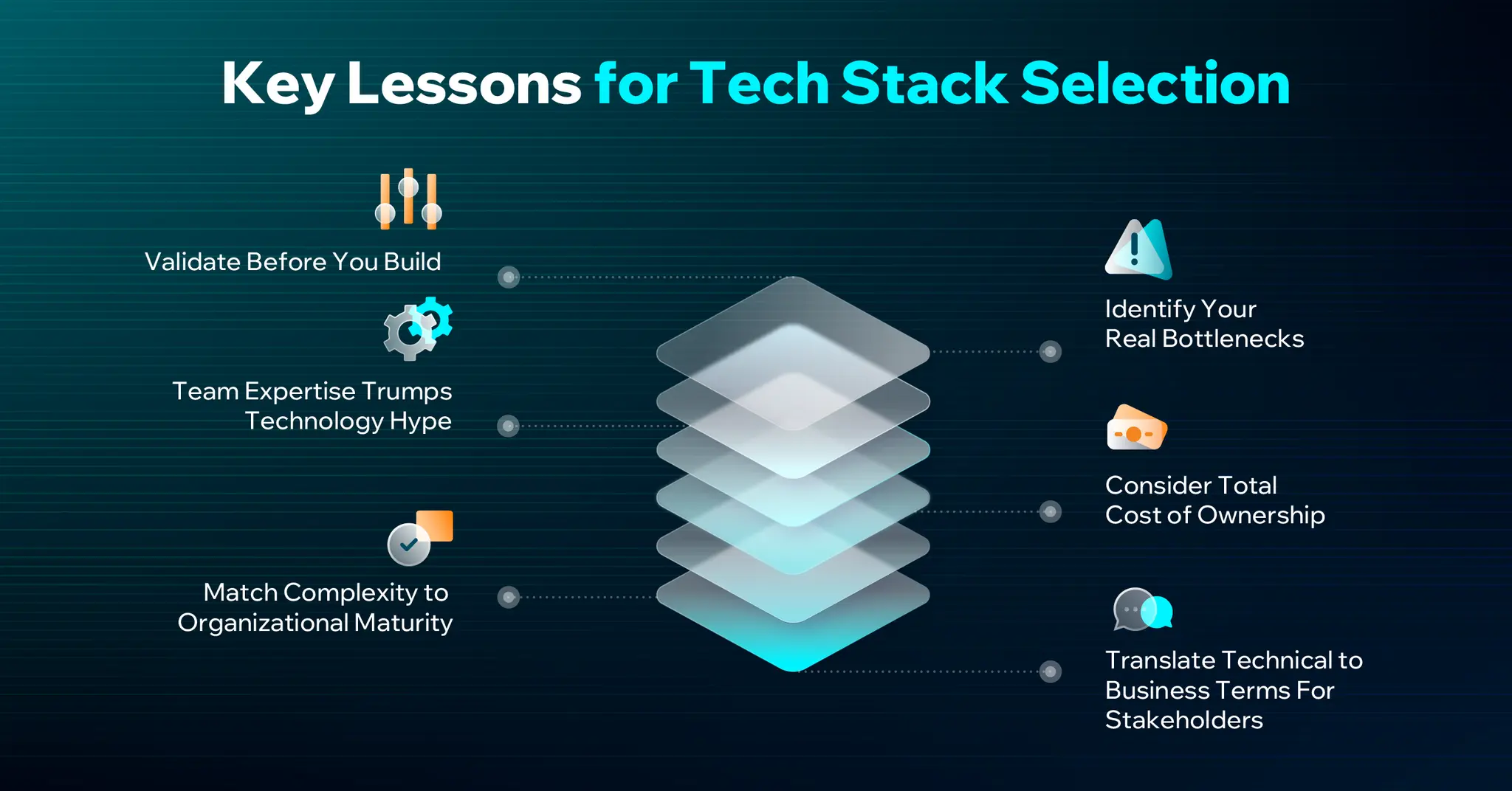

Key Lessons for Tech Stack Selection

This experience taught me invaluable lessons that I now apply to every project at CodeLink.

1. Validate Before You Build

Never design an entire architecture in meetings. Build a two-week proof of concept (PoC) that focuses on a single, critical user journey. Every system sounds elegant in a slide deck. The only real test is whether it works under real conditions.

Rule of thumb: If your proposed stack makes easy things hard, it will make hard things impossible.

2. Team Expertise Trumps Technology Hype

Your team's existing skills matter more than the latest trends. A "worse" technology that your team has mastered will outperform a "better" technology they struggle with.

We chose Go without any Go expertise, and paid for it with slow progress and burned-out developers. As a technical leader, your job is to set your team up for success, not to implement the most impressive architecture.

3. Match Complexity to Organizational Maturity

Microservices solve real problems at scale and with mature teams. Startups need velocity, not distributed systems complexity. Build for your current reality, not your imagined future. Start with a modular monolith. Extract services when you have real pain points, not theoretical ones. Complexity is a tax, don’t pay it early.

4. Identify Your Real Bottlenecks

We assumed our bottleneck was application performance when it was actually database queries and developer productivity. Optimize the constraints that matter.

When velocity drops significantly, stop and investigate immediately. Don't let teams struggle for months. Treat it as the emergency it is.

5. Consider Total Cost of Ownership

Every tech choice comes with a hidden price tag – some visible, some not. When evaluating technologies, go beyond performance and features:

-

Learning curve and hiring difficulty

-

Maintenance burden and update frequency

-

Testing and debugging complexity

-

Long-term scalability (both technical and organizational)

-

Team morale and productivity impact

6. Translate Technical to Business Terms For Stakeholders

When technical choices go wrong, your response matters more than the original mistake.

Stakeholders may not understand technical debt, but they understand risk, delays, and missed milestones. Be transparent about problems, frame technical debt in business impact terms, and provide clear success metrics for solutions.

Handle the sunk cost fallacy head-on. Sometimes the best decision is admitting the fault in the architecture and starting over.

Harry Nguyen

Technical Lead

Harry is a seasoned Technical Lead with extensive experience in both web and mobile application development. He specializes in software architecture and complex problem-solving, leading teams to deliver high-quality and innovative solutions. His work often involves cutting-edge technologies, including AI, to drive impactful results.