case study

Tanoto

Client

CodeLink

Industry

HRTech

Services

AI/ML

Custom Software Development

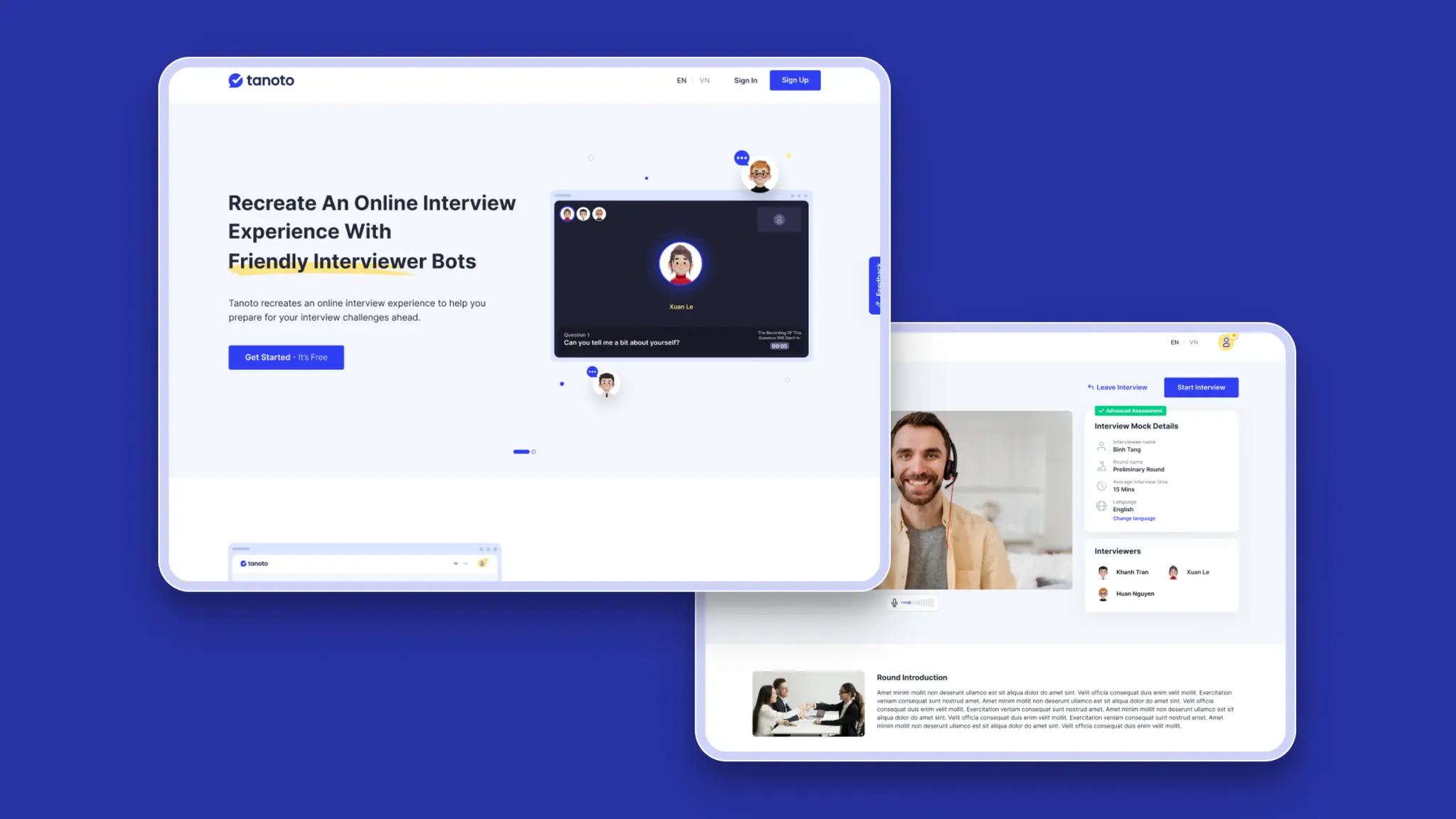

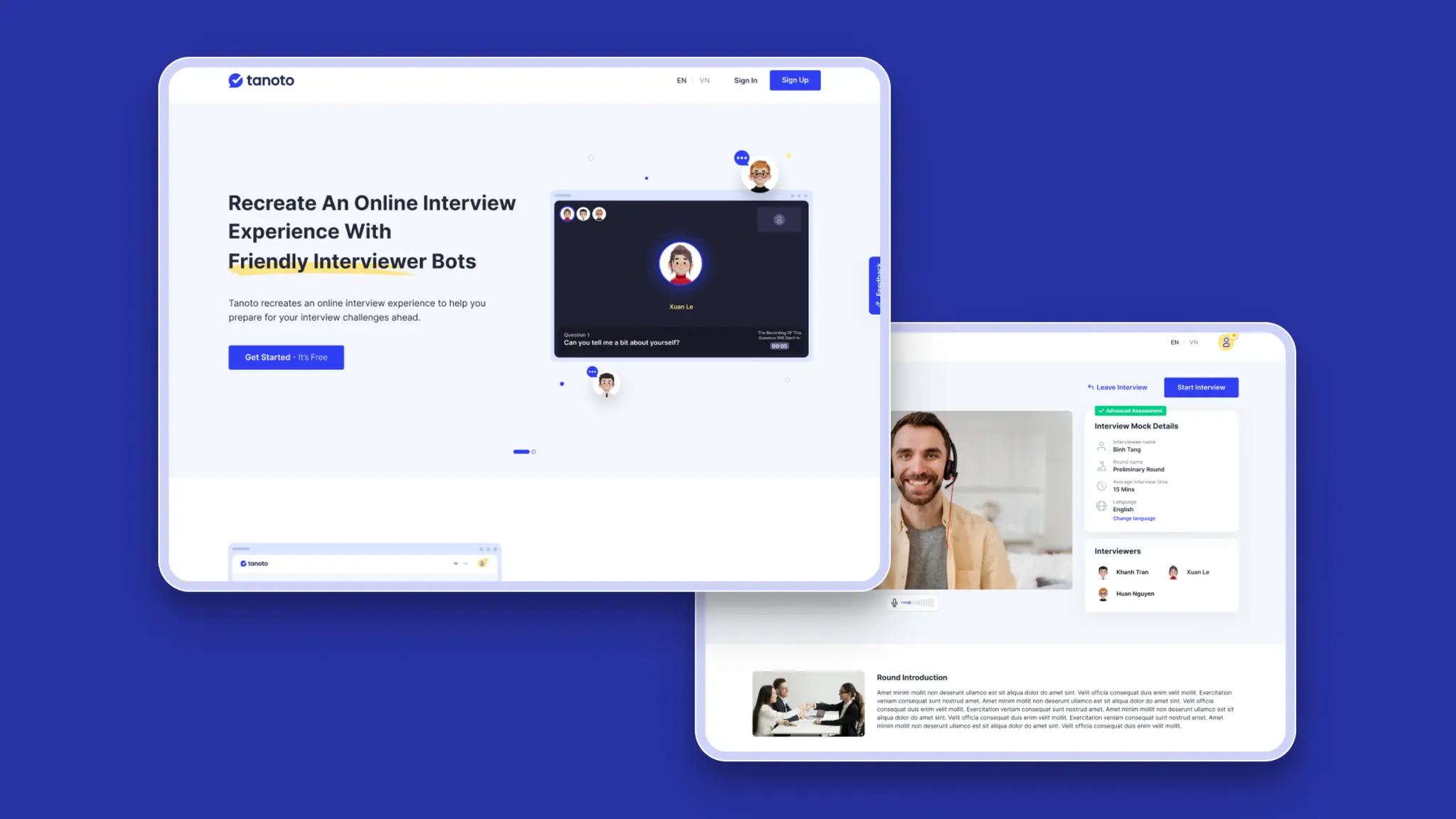

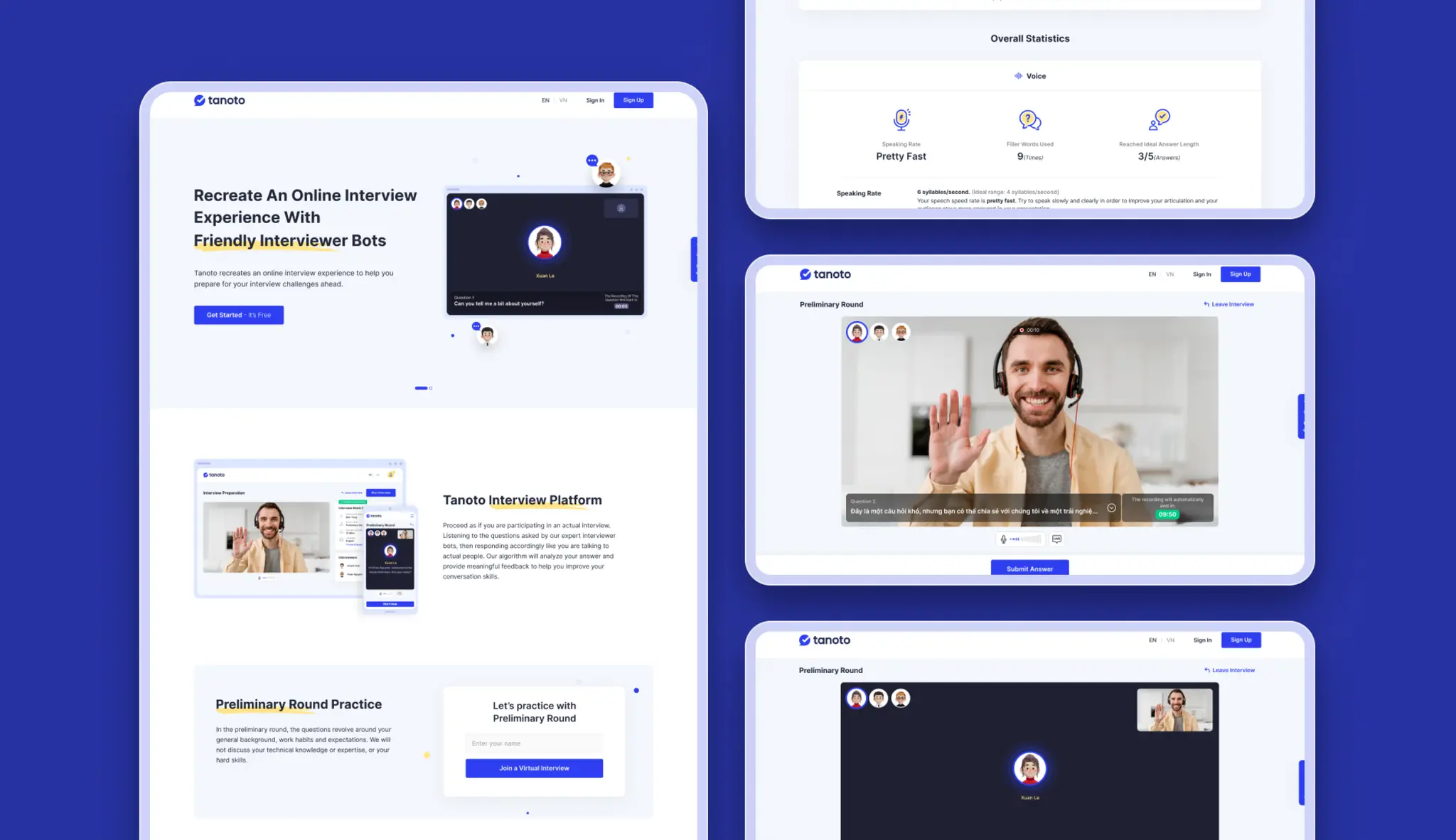

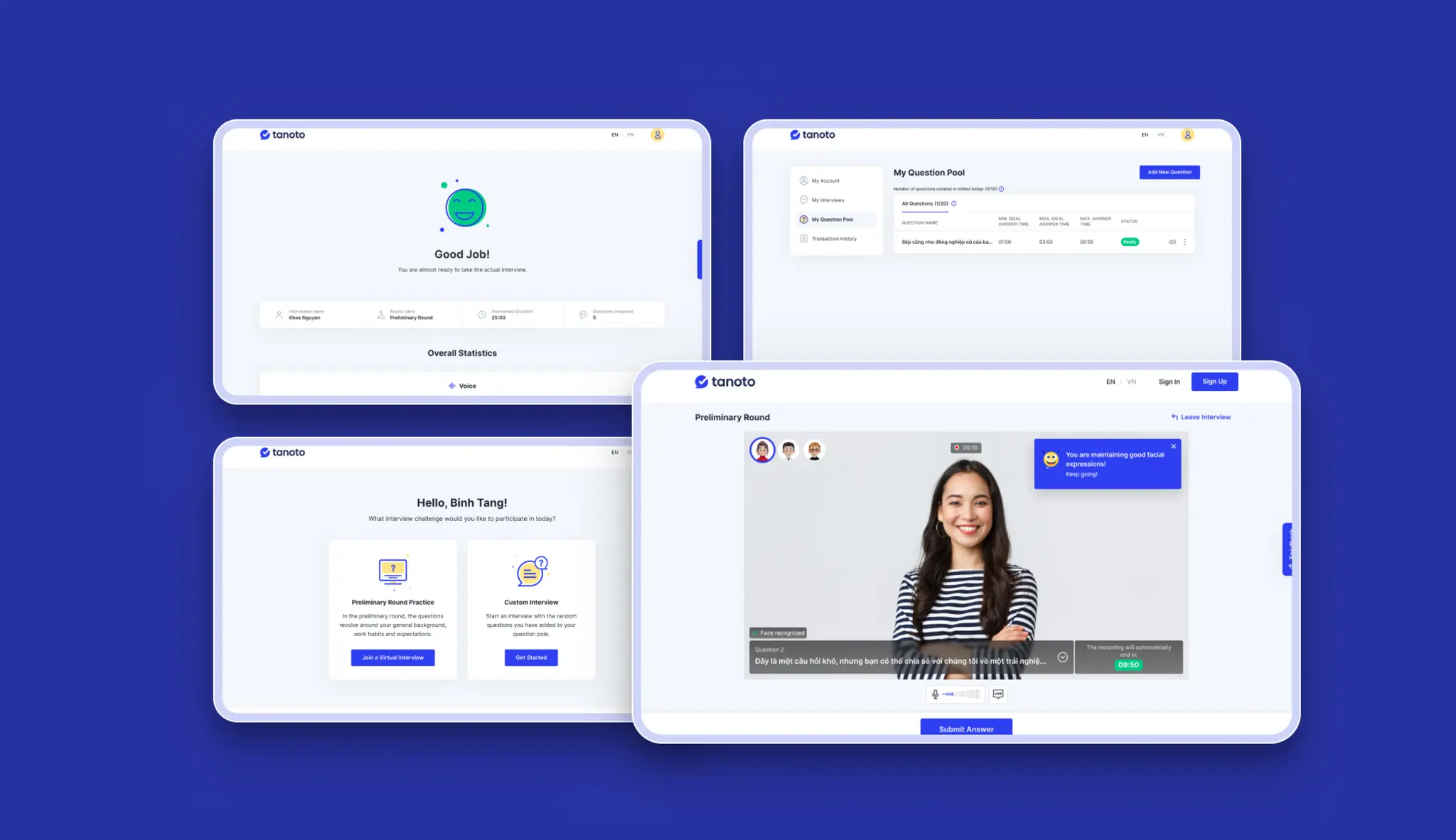

Tanoto is an app designed to help users practice interviews and receive feedback on their answers' content, pace, eye-tracking, and facial emotions.

Team Model

Technology

NextJS

NestJS

ReactJS

Python

Whisper

Face Landmark Detection

MediaPipe Face Detection

Emotion Classification

Text to Speech

Platform

How can we assist job applicants in improving their interview and presentation skills?

CodeLink had developed its own proprietary text-to-speech and speech-to-text AI model and wanted to explore how to turn it into a commercial product. Stakeholders tasked CodeLink's internal team with running a design sprint and proposing a tested prototype, which would then be built into an MVP release.

The CodeLink internal team worked fully autonomously: it facilitated the design sprint, tested the prototype, and built the MVP, followed by a fully functional V1 release of the product.

The MVP phase took 6 weeks to complete and release for beta testing. We then ran another 6-week phase to implement basic authentication and profile management for the V1 release of the product.

To start the project, we conducted a 1-week virtual Design Sprint workshop to establish the product's goals, vision, and value proposition. We created a low-fi prototype and held in-person user testing to learn what users needed and how to meet those needs. The tests validated the product concept, and we began development. To build the app, the team used a home-grown Text-to-Speech solution and Whisper for Speech-to-Text. We then researched and applied real-time face landmark tracking models, such as MediaPipe, followed by real-time emotion classification models. The final product was fully developed and released to the market.
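As an illustration of how pace feedback could be derived from a speech-to-text transcript, here is a minimal Python sketch. It is not Tanoto's actual implementation. It assumes the transcript arrives as timestamped segments with `start`, `end`, and `text` fields, similar to the segments Whisper returns. The names `Segment`, `words_per_minute`, and `pace_feedback`, as well as the threshold values, are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcribed span; fields mirror a Whisper-style segment (assumed shape)."""
    start: float  # seconds from the beginning of the recording
    end: float    # seconds from the beginning of the recording
    text: str


def words_per_minute(segments: list[Segment]) -> float:
    """Average pace over the time spent speaking (pauses between segments are excluded)."""
    total_words = sum(len(s.text.split()) for s in segments)
    spoken_seconds = sum(max(s.end - s.start, 0.0) for s in segments)
    if spoken_seconds == 0:
        return 0.0
    return total_words / spoken_seconds * 60.0


def pace_feedback(wpm: float, slow: float = 110.0, fast: float = 170.0) -> str:
    """Map a pace to a coarse label; the thresholds are illustrative assumptions."""
    if wpm < slow:
        return "slow"
    if wpm > fast:
        return "fast"
    return "good"


# Example: two segments of an interview answer with a one-second pause between them.
answer = [
    Segment(start=0.0, end=2.0, text="I led a team of five"),
    Segment(start=3.0, end=5.0, text="and shipped on time"),
]
wpm = words_per_minute(answer)
label = pace_feedback(wpm)
```

Excluding pauses is a design choice in this sketch; measuring pace over the full recording length instead would make long silences lower the score.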

Tags

Design Sprint

Autonomous Team

Artificial Intelligence

Machine Learning

Product Design

Product Development

Prototype Testing
