MCP under the hood

If you have been following AI development, you have probably heard about the Model Context Protocol, or MCP for short. It is a simple, open protocol that standardizes how LLM applications connect to external tools and data, extending what the model can do.

This article uses a real-time weather query in the Claude Desktop App to walk through how MCP works under the hood, step by step.

Real-time query example

We implement a weather MCP server that lets users query real-time weather conditions for US locations from the Claude Desktop App.

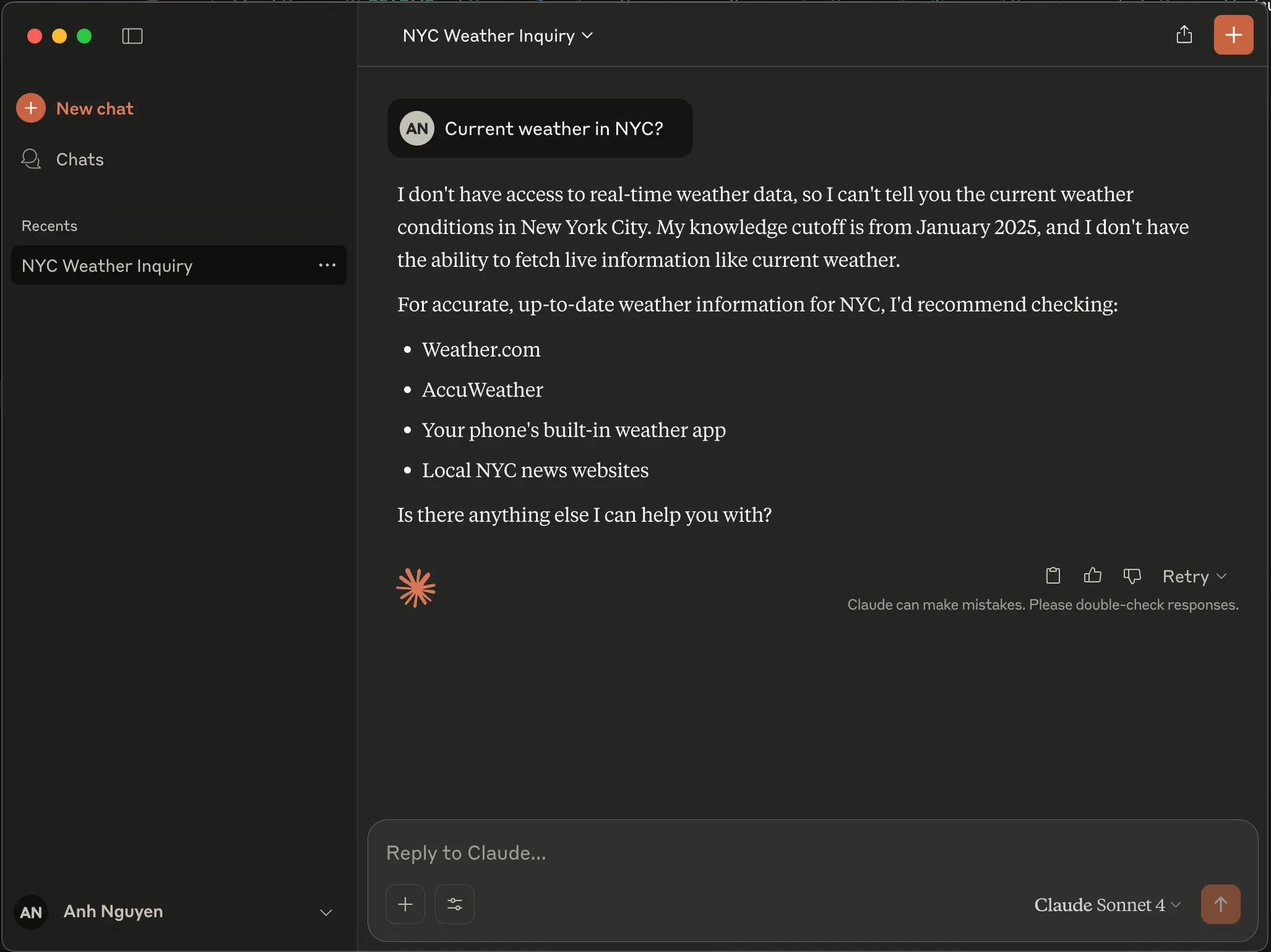

An LLM on its own cannot query real-time data, so if you ask Claude “Current weather in NYC?” without MCP (and with web search turned off), it replies with something like this:

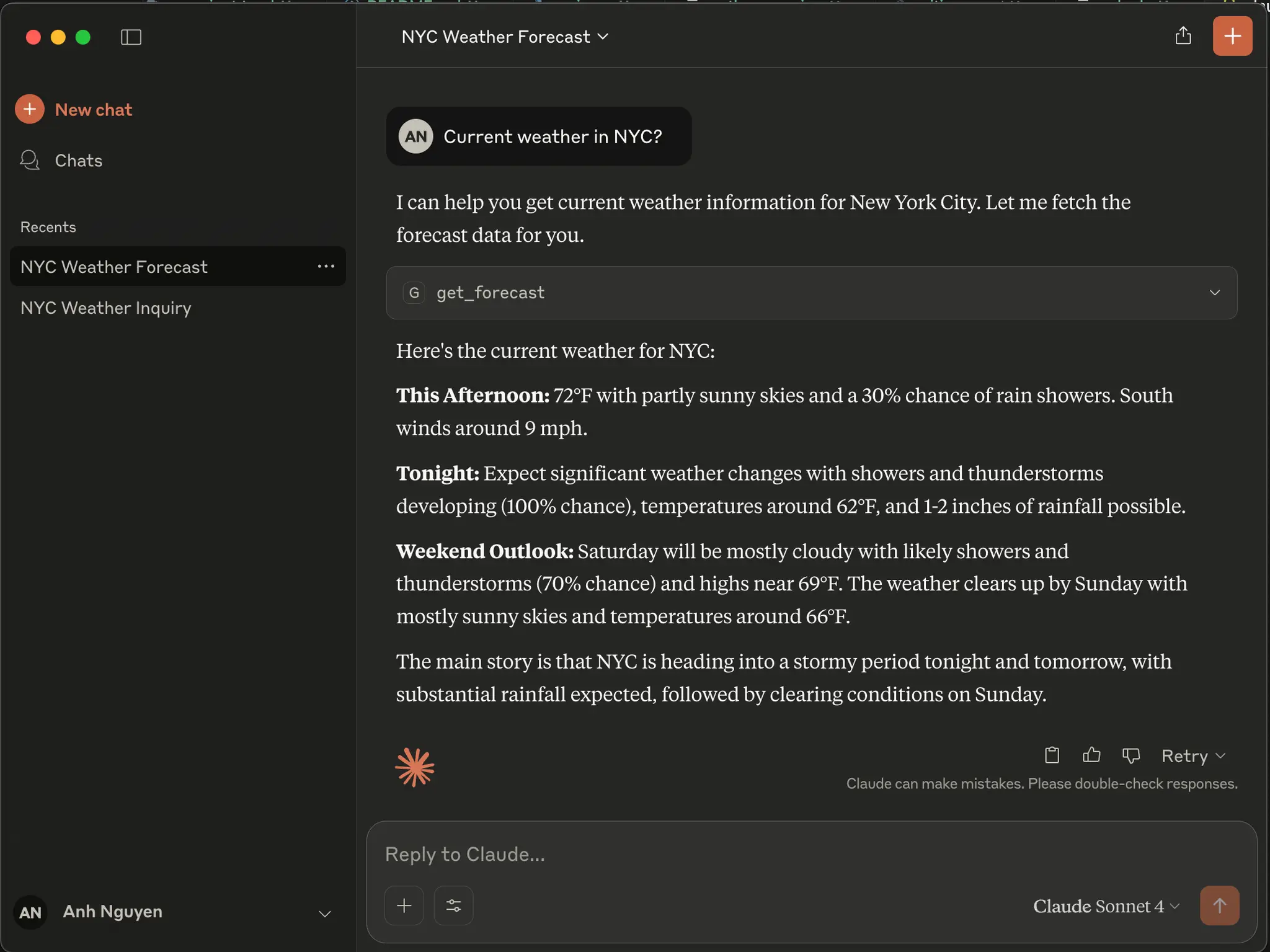

And with MCP, it can now answer the query by leveraging tools:

How does it work?

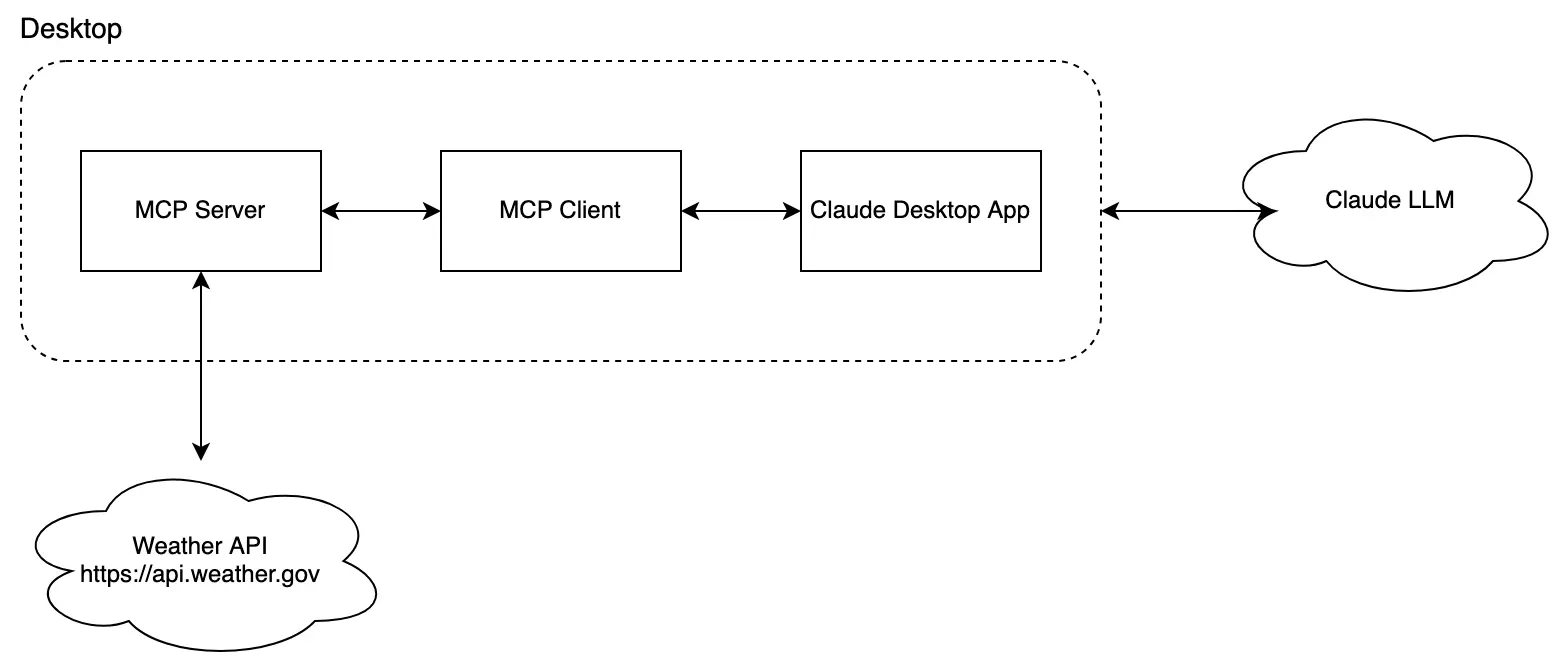

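At a high level, there are several components: the Claude Desktop App (the host), the MCP Client, the MCP Server, the Claude LLM, and the Weather API.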

It's important to note that in this example, the MCP Client and MCP Server both run locally on the user's desktop. The Claude Desktop App is the MCP host, meaning that the MCP Client runs inside the app.

The MCP Server provides two tools to return real-time information:

- get_alerts: Get weather alerts for a US state. It requires one argument, state: a two-letter US state code (e.g., CA, NY).

- get_forecast: Get the weather forecast for a location. It requires two arguments, latitude and longitude: the coordinates of the location.

For each tool, the MCP Server calls the corresponding Weather API endpoint; these are ordinary HTTP endpoints under https://api.weather.gov.
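Concretely, the tool implementations only need plain HTTP GET requests. The sketch below uses only Python's standard library and assumes the National Weather Service endpoints /alerts/active/area/{state} and /points/{latitude},{longitude}. The latter returns metadata whose properties.forecast field holds the URL of the actual forecast, so get_forecast needs two requests. The USER_AGENT value and the helper names are hypothetical.

```python
import json
import urllib.request

NWS_API_BASE = "https://api.weather.gov"
# Hypothetical identifier: NWS asks clients to send a descriptive User-Agent.
USER_AGENT = "weather-mcp-demo/0.1 (you@example.com)"


def alerts_url(state: str) -> str:
    """Active alerts for a two-letter US state code, e.g. NY."""
    return f"{NWS_API_BASE}/alerts/active/area/{state.upper()}"


def points_url(latitude: float, longitude: float) -> str:
    """Grid metadata for a coordinate; its JSON links to the forecast URL."""
    return f"{NWS_API_BASE}/points/{latitude},{longitude}"


def fetch_json(url: str) -> dict:
    """Plain HTTP GET returning the decoded (Geo)JSON body."""
    request = urllib.request.Request(
        url, headers={"User-Agent": USER_AGENT, "Accept": "application/geo+json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def fetch_forecast_periods(latitude: float, longitude: float) -> list[dict]:
    """get_forecast needs two calls: /points first, then the forecast URL it returns."""
    points = fetch_json(points_url(latitude, longitude))
    forecast = fetch_json(points["properties"]["forecast"])
    return forecast["properties"]["periods"]


# Requires network access; NYC coordinates from the example:
# periods = fetch_forecast_periods(40.7128, -74.006)
```

Nothing about these calls is MCP-specific. MCP only standardizes how the host discovers and invokes the functions that wrap them.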

Under the hood, several steps are going on:

- Claude Desktop gets a list of available tools, in this case get_alerts and get_forecast

- Claude Desktop sends the user query to Claude LLM

- Claude LLM analyzes the available tools and decides which ones to use

- The Claude Desktop executes the chosen tools through the MCP server

- Weather API returns the real-time information

- The results are sent back to Claude LLM

- Claude LLM formulates a natural language response and Claude Desktop displays the final answer
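These steps form a small loop on the host side: keep calling the model while it asks for tools, run each requested tool, and feed the results back. The self-contained sketch below makes two assumptions. FakeLLM and FakeMCPServer are hypothetical stand-ins for the Claude API and a real MCP session. Messages are plain dicts in the Messages API's content-block format.

```python
class FakeMCPServer:
    """Hypothetical stand-in for an MCP session with the weather server."""

    def list_tools(self) -> list[dict]:
        return [{
            "name": "get_forecast",
            "description": "Get weather forecast for a location",
            "input_schema": {
                "type": "object",
                "properties": {"latitude": {"type": "number"}, "longitude": {"type": "number"}},
                "required": ["latitude", "longitude"],
            },
        }]

    def call_tool(self, name: str, arguments: dict) -> str:
        if name == "get_forecast":
            return "This Afternoon: 72°F, partly sunny"
        raise ValueError(f"unknown tool: {name}")


class FakeLLM:
    """Hypothetical stand-in for Claude: requests get_forecast, then answers in text."""

    def create(self, messages: list[dict], tools: list[dict]) -> dict:
        last = messages[-1]["content"]
        if isinstance(last, list) and last and last[0].get("type") == "tool_result":
            return {"stop_reason": "end_turn",
                    "content": [{"type": "text", "text": "NYC: " + last[0]["content"]}]}
        return {"stop_reason": "tool_use",
                "content": [{"type": "tool_use", "id": "toolu_1", "name": "get_forecast",
                             "input": {"latitude": 40.7128, "longitude": -74.006}}]}


def answer(query: str, llm: FakeLLM, server: FakeMCPServer) -> str:
    tools = server.list_tools()                                  # Step 1
    messages = [{"role": "user", "content": query}]
    response = llm.create(messages, tools)                       # Steps 2-3
    while response["stop_reason"] == "tool_use":
        results = []
        for block in response["content"]:
            if block["type"] == "tool_use":                      # Steps 4-5
                output = server.call_tool(block["name"], block["input"])
                results.append({"type": "tool_result", "tool_use_id": block["id"],
                                "content": output})
        messages.append({"role": "assistant", "content": response["content"]})
        messages.append({"role": "user", "content": results})
        response = llm.create(messages, tools)                   # Step 6
    # Step 7: keep only the text blocks of the final reply
    return "".join(b["text"] for b in response["content"] if b["type"] == "text")


print(answer("Current weather in NYC?", FakeLLM(), FakeMCPServer()))
# → NYC: This Afternoon: 72°F, partly sunny
```

The loop rather than a single round trip matters because the model may chain several tool calls before it produces a final answer.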

A deeper view

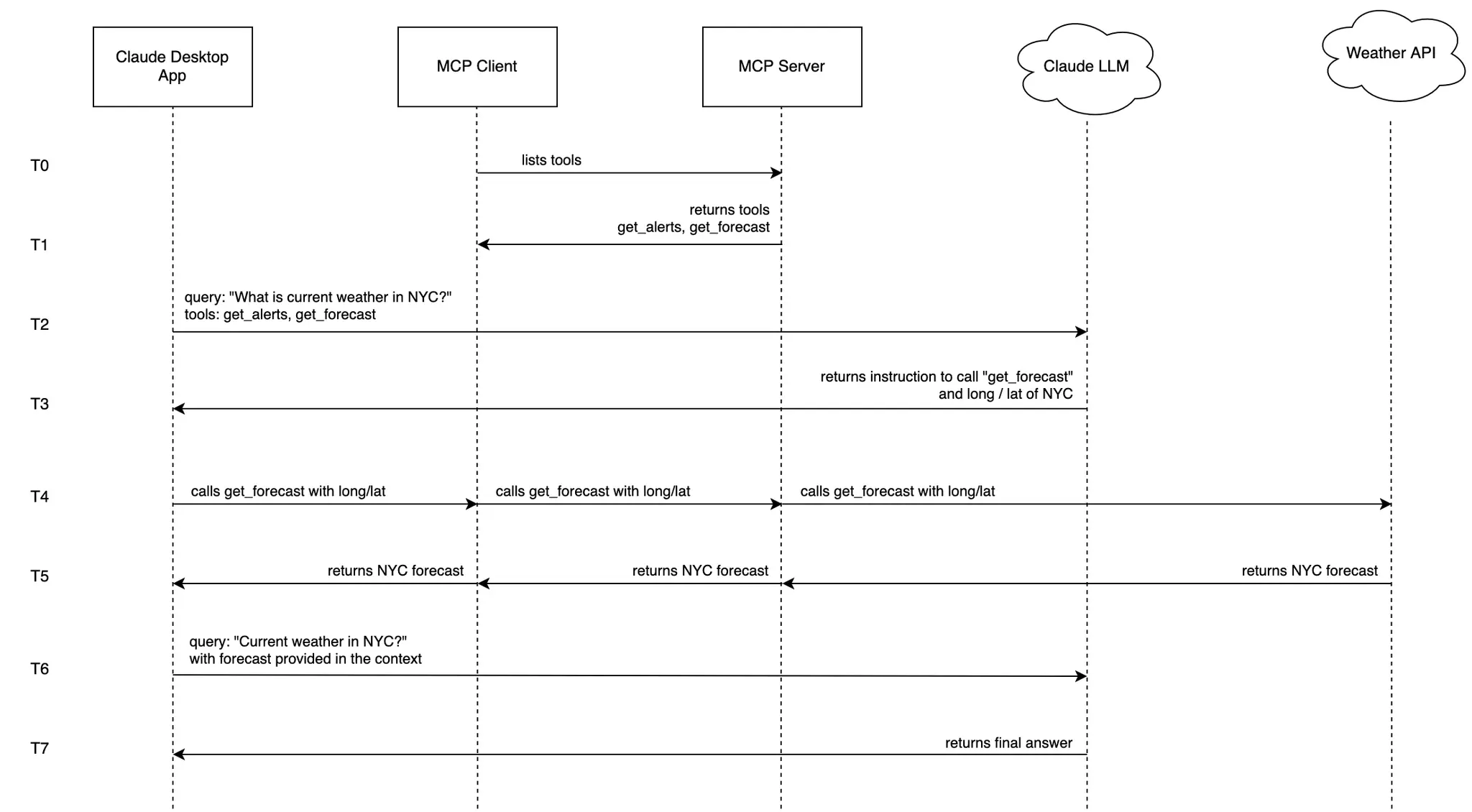

To understand how all these components coordinate, we'll use a request diagram. Please note that it has already been simplified to omit some protocol details.

Step 1: The MCP Client gets a list of available tools from MCP Server

The MCP Client establishes a connection to the Server and retrieves available tools. These tools are stored in the session and are structured as follows:

```python
[
    {
        'name': 'get_alerts',
        'description': 'Get weather alerts for a US state.',
        'input_schema': {
            'properties': {
                'state': {'title': 'State', 'type': 'string'}
            },
            'required': ['state'],
            'title': 'get_alertsArguments',
            'type': 'object'
        }
    },
    {
        'name': 'get_forecast',
        'description': 'Get weather forecast for a location',
        'input_schema': {
            'properties': {
                'latitude': {'title': 'Latitude', 'type': 'number'},
                'longitude': {'title': 'Longitude', 'type': 'number'}
            },
            'required': ['latitude', 'longitude'],
            'title': 'get_forecastArguments',
            'type': 'object'
        }
    }
]
```
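The list above is in the shape the Claude API expects. On the wire, MCP messages are JSON-RPC 2.0, and the server describes each tool's arguments in a field named inputSchema, which the client renames to input_schema before handing the list to Claude. The sketch below shows that step. It assumes an already-initialized session (the initialize handshake is omitted) and a trimmed, hypothetical server reply.

```python
import json

# Step 1 on the wire (simplified): a JSON-RPC 2.0 request from the MCP Client.
list_request = {"jsonrpc": "2.0", "id": 2, "method": "tools/list"}

# Trimmed, hypothetical server reply; note MCP's camelCase "inputSchema".
list_response = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "tools": [
            {
                "name": "get_alerts",
                "description": "Get weather alerts for a US state.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"state": {"title": "State", "type": "string"}},
                    "required": ["state"],
                },
            }
        ]
    },
}


def to_claude_tools(response: dict) -> list[dict]:
    """Rename MCP's inputSchema to the input_schema field the Claude API expects."""
    return [
        {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "input_schema": tool["inputSchema"],
        }
        for tool in response["result"]["tools"]
    ]


print(json.dumps(list_request))
print(to_claude_tools(list_response))
```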

All of this information (tool names, descriptions, and arguments) is defined on the server side. Using the MCP Python SDK, the server code looks like this:

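```python
from mcp.server.fastmcp import FastMCP

# FastMCP turns each decorated function into an MCP tool, deriving its
# name, description, and input schema from the signature and docstring.
mcp = FastMCP("weather")


@mcp.tool()
async def get_alerts(state: str) -> str:
    """Get weather alerts for a US state.

    Args:
        state: Two-letter US state code (e.g. CA, NY)
    """
    # Implementation...


@mcp.tool()
async def get_forecast(latitude: float, longitude: float) -> str:
    """Get weather forecast for a location.

    Args:
        latitude: Latitude of the location
        longitude: Longitude of the location
    """
    # Implementation...


if __name__ == "__main__":
    # Claude Desktop launches the server as a subprocess and talks to it over stdio
    mcp.run(transport="stdio")
```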
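The SDK derives the tool metadata from the Python function itself. The hypothetical describe_tool helper below approximates that using only the standard library. It is not the SDK's actual implementation. It reads a function's name, the first line of its docstring, and its type hints, and produces an entry shaped like the tool list in Step 1.

```python
import inspect
import typing

# Assumed mapping from Python annotations to JSON Schema type names
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func) -> dict:
    """Build a tool entry from a function's name, docstring, and type hints."""
    hints = typing.get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {
            "title": name.replace("_", " ").title(),
            "type": JSON_TYPES[hints[name]],
        }
        if param.default is inspect.Parameter.empty:  # no default means required
            required.append(name)
    doc = inspect.getdoc(func) or ""
    return {
        "name": func.__name__,
        "description": doc.splitlines()[0] if doc else "",
        "input_schema": {
            "properties": properties,
            "required": required,
            "title": f"{func.__name__}Arguments",
            "type": "object",
        },
    }


async def get_forecast(latitude: float, longitude: float) -> str:
    """Get weather forecast for a location.

    Args:
        latitude: Latitude of the location
        longitude: Longitude of the location
    """
    return "..."  # real implementation omitted


print(describe_tool(get_forecast))
```

This is why renaming a parameter or editing a docstring on the server changes what the LLM sees, with no client-side changes.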

Step 2: Claude Desktop sends your question to Claude LLM

Tool calling is a native feature of the Claude API rather than something introduced by MCP. According to Anthropic's official documentation:

> If you include tools in your API request, the model may return tool_use content blocks that represent the model's use of those tools. You can then run those tools using the tool input generated by the model and then optionally return results back to the model using tool_result content blocks.

The tool list from Step 1 is passed as-is in the tools parameter of the request to Claude LLM. Example code in Python:

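```python
from anthropic import Anthropic

client = Anthropic()  # reads the ANTHROPIC_API_KEY environment variable
available_tools = ...  # the tool list retrieved from the MCP Server in Step 1

response = client.messages.create(
    model="claude-3-5-sonnet-20241022",
    max_tokens=1000,
    messages=[{"role": "user", "content": "Current weather in NYC?"}],
    tools=available_tools,
)
```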

Step 3: Claude LLM analyzes the available tools and decides which ones to use

Given the provided tools, the LLM determines which one fits the user's query. In this example, it recognizes that answering "Current weather in NYC?" requires calling get_forecast.

Because this tool requires two parameters, latitude and longitude, the LLM supplies approximate coordinates for NYC, which it already knows from its training data.

The LLM will respond with something like this:

```python
Message(
    ...,
    content=[
        TextBlock(citations=None, text="Let me get the weather forecast for New York City. I'll use approximate coordinates for Manhattan.", type='text'),
        ToolUseBlock(id='toolu_***', input={'latitude': 40.7128, 'longitude': -74.006}, name='get_forecast', type='tool_use'),
    ],
    model='claude-3-5-sonnet-20241022',
    role='assistant',
    stop_reason='tool_use',
    stop_sequence=None,
    type='message',
    usage=Usage(...),
)
```
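Before anything runs, the host has to pull the tool call out of Claude's reply. The sketch below works on the raw JSON form of the response, using plain dicts rather than the SDK's TextBlock and ToolUseBlock objects, and reuses the values above. The hypothetical id toolu_example stands in for the redacted one.

```python
# The response above as plain dicts (JSON form of the Messages API reply)
response = {
    "role": "assistant",
    "stop_reason": "tool_use",
    "content": [
        {"type": "text", "text": "Let me get the weather forecast for New York City. "
                                 "I'll use approximate coordinates for Manhattan."},
        {"type": "tool_use", "id": "toolu_example", "name": "get_forecast",
         "input": {"latitude": 40.7128, "longitude": -74.006}},
    ],
}


def pending_tool_calls(response: dict) -> list[tuple[str, str, dict]]:
    """Return (id, tool name, arguments) for every tool_use block Claude emitted."""
    if response["stop_reason"] != "tool_use":
        return []  # Claude answered directly; nothing to execute
    return [
        (block["id"], block["name"], block["input"])
        for block in response["content"]
        if block["type"] == "tool_use"
    ]


for call_id, name, arguments in pending_tool_calls(response):
    print(call_id, name, arguments)
```

The id matters later: the tool's output must be sent back tagged with it, so Claude can match each result to its request.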

Step 4: The Claude Desktop executes the chosen tools through the MCP server

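Claude Desktop then runs the chosen tool through MCP. Because of the protocol design, the request passes from the Claude Desktop App to the MCP Client, which forwards it to the MCP Server, whose get_forecast implementation queries the Weather API. Claude Desktop asks the user for approval before the tool runs.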
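On the MCP side, this is a single tools/call request carrying the tool name and the arguments Claude chose. The sketch below shows the message the MCP Client sends and how it might read the reply. It assumes the JSON-RPC shapes from the MCP specification: result.content is a list of typed items, and isError flags a failed tool. For a local server like this one, Claude Desktop exchanges these messages with the server process over stdin/stdout. The helper names are hypothetical.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids must not be reused within a session


def tools_call_request(name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request (JSON-RPC 2.0)."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def tool_result_text(raw_response: str) -> str:
    """Join the text items of a tools/call result; raise on either kind of error."""
    message = json.loads(raw_response)
    if "error" in message:  # protocol-level JSON-RPC error
        raise RuntimeError(message["error"]["message"])
    result = message["result"]
    text = "\n".join(item["text"] for item in result["content"] if item["type"] == "text")
    if result.get("isError"):  # the tool itself reported a failure
        raise RuntimeError(text)
    return text


print(tools_call_request("get_forecast", {"latitude": 40.7128, "longitude": -74.006}))
reply = ('{"jsonrpc": "2.0", "id": 1, "result": {"content": '
         '[{"type": "text", "text": "This Afternoon: ..."}], "isError": false}}')
print(tool_result_text(reply))
```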

Step 5: Weather API returns the real-time information

The Weather API's response travels back in reverse order: first to the MCP Server, which turns it into text, then to the MCP Client, and finally to the Claude Desktop App. The payload looks similar to this:

```text
This Afternoon:
Temperature: 72°F
Wind: 9 mph S
Forecast: A chance of rain showers. Partly sunny. High near 72, with temperatures falling to around 68 in the afternoon. South wind around 9 mph. Chance of precipitation is 30%.
---
Tonight:
Temperature: 62°F
Wind: 6 to 9 mph SE
Forecast: A chance of rain showers before 11pm, then showers and thunderstorms. Cloudy. Low around 62, with temperatures rising to around 64 overnight. Southeast wind 6 to 9 mph. Chance of precipitation is 100%. New rainfall amounts between 1 and 2 inches possible.
...
```
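This text is produced by the server, not by the Weather API. The API returns JSON forecast periods, and get_forecast flattens them into readable text before returning its string result. The sketch below shows that formatting step. It assumes the NWS period fields name, temperature, temperatureUnit, windSpeed, windDirection, and detailedForecast. The limit of five periods and the trimmed sample values are illustrative.

```python
def format_periods(periods: list[dict], limit: int = 5) -> str:
    """Render forecast periods as 'Name / Temperature / Wind / Forecast' text blocks."""
    blocks = []
    for p in periods[:limit]:  # hypothetical cap to keep the tool output short
        blocks.append(
            f"{p['name']}:\n"
            f"Temperature: {p['temperature']}°{p['temperatureUnit']}\n"
            f"Wind: {p['windSpeed']} {p['windDirection']}\n"
            f"Forecast: {p['detailedForecast']}"
        )
    return "\n---\n".join(blocks)


sample = [
    {"name": "This Afternoon", "temperature": 72, "temperatureUnit": "F",
     "windSpeed": "9 mph", "windDirection": "S",
     "detailedForecast": "A chance of rain showers. Partly sunny."},
    {"name": "Tonight", "temperature": 62, "temperatureUnit": "F",
     "windSpeed": "6 to 9 mph", "windDirection": "SE",
     "detailedForecast": "A chance of rain showers before 11pm, then showers and thunderstorms."},
]
print(format_periods(sample))
```

Returning compact text rather than raw JSON keeps the tool result small and easy for the LLM to read.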

Step 6: The results are sent back to Claude LLM

The Claude Desktop App sends the conversation back to Claude LLM: the original query "Current weather in NYC?", Claude's tool-use turn from Step 3, and the weather forecast payload from Step 5 as the tool's result.
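In Messages API terms, the tool output goes in a tool_result content block whose tool_use_id matches the id of Claude's tool_use block. The sketch below builds the resulting messages list. The helper name and the id toolu_example are hypothetical.

```python
def follow_up_messages(query: str, assistant_content: list[dict],
                       tool_use_id: str, tool_output: str) -> list[dict]:
    """Messages for the second Claude request, after the tool has run."""
    return [
        {"role": "user", "content": query},
        # Claude's previous turn (including its tool_use block), sent back unchanged
        {"role": "assistant", "content": assistant_content},
        # The tool output, linked to that tool_use block by its id
        {"role": "user", "content": [
            {"type": "tool_result", "tool_use_id": tool_use_id, "content": tool_output},
        ]},
    ]


assistant_turn = [
    {"type": "tool_use", "id": "toolu_example", "name": "get_forecast",
     "input": {"latitude": 40.7128, "longitude": -74.006}},
]
messages = follow_up_messages("Current weather in NYC?", assistant_turn,
                              "toolu_example", "This Afternoon:\nTemperature: 72°F\n...")
# messages is then sent with another messages.create(...) call, tools included
```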

Step 7: Claude LLM formulates a natural language response, and Claude Desktop displays the final answer

Based on the forecast data provided, Claude writes a friendly natural language response, which is then displayed in the Desktop App:

Here's the current weather for NYC:

This Afternoon: 72°F with partly sunny skies and a 30% chance of rain showers. South winds around 9 mph.

Tonight: Expect significant weather changes with showers and thunderstorms developing (100% chance), temperatures around 62°F, and 1-2 inches of rainfall possible.

And that's the whole flow of this simple example! As you can see, MCP standardizes and simplifies how an LLM application discovers and calls external tools or resources.

Why is MCP needed?

MCP is so simple that you could almost orchestrate tool calling without it. However, it standardizes how tools and resources are exposed, and the community has quickly built many useful MCP servers. There are literally hundreds ready to use, such as GitHub, Jira, Slack, Cloudflare, and Blender, and the list keeps growing. You can add these to the Claude Desktop App or any other MCP host (such as an IDE or chat client) to extend its capabilities!
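Adding a local server to Claude Desktop is a matter of configuration. The app reads claude_desktop_config.json (on macOS, under ~/Library/Application Support/Claude/). It launches each entry under mcpServers as a subprocess and talks to it over stdio. The sketch below generates such an entry for the weather server. The server key, command, and path are hypothetical placeholders.

```python
import json

# Hypothetical entry: adjust the command and path to wherever your server lives.
config = {
    "mcpServers": {
        "weather": {
            "command": "python",
            "args": ["/ABSOLUTE/PATH/TO/weather.py"],
        }
    }
}

# Paste the printed JSON into claude_desktop_config.json, then restart the app.
print(json.dumps(config, indent=2))
```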

Tools, as used in this example, are one of three core capabilities an MCP server can expose:

- Resources: File-like data that can be read by clients (like API responses or file contents)

- Tools: Functions that can be called by the LLM (with user approval)

- Prompts: Pre-written templates that help users accomplish specific tasks
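Each capability has its own JSON-RPC methods, alongside tools/list and tools/call for tools:

- Resources use resources/list and resources/read.
- Prompts use prompts/list and prompts/get.

The MCP documentation describes tools as model-controlled, while resources are typically selected by the application and prompts by the user. The sketch below shows the requests a client might send. The URI, prompt name, and arguments are hypothetical.

```python
import json


def jsonrpc(method: str, params: dict, request_id: int) -> str:
    """Serialize a JSON-RPC 2.0 request in the form MCP uses."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": method, "params": params})


# Hypothetical URI, prompt name, and arguments, for illustration only
requests = [
    jsonrpc("resources/list", {}, 1),
    jsonrpc("resources/read", {"uri": "file:///logs/app.log"}, 2),
    jsonrpc("prompts/list", {}, 3),
    jsonrpc("prompts/get", {"name": "summarize_forecast", "arguments": {"city": "NYC"}}, 4),
]
for r in requests:
    print(r)
```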

Final thoughts

The MCP ecosystem is quite new, but there's definitely a lot of excitement surrounding it. It will mature further as developers rush to build innovative applications with it.

While MCP significantly enhances AI capabilities, it also brings a new wave of security threats, which we have already addressed in another article.

KA Nguyen

Co-founder & CTO

As CodeLink's CTO, KA fosters innovation and personal development through curiosity-driven leadership. He excels in building and leading engineering teams, encouraging continuous learning and development. Currently, KA is developing machine learning models to address practical challenges, aiming to create impactful societal solutions. His leadership fosters a positive team environment marked by respect and collaboration.